In the evolving world of scientific, technical, and medical (STM) publishing, artificial intelligence (AI) has become a transformative force, reshaping workflows and enhancing productivity. From manuscript submission to peer review and final publication, AI tools are revolutionizing every aspect of the publishing process. However, as we embrace these technological advancements, it remains crucial to preserve the human touch that upholds the quality and integrity of STM publications. This blog explores how to balance AI efficiency with human insight, maintaining the core elements of scholarly publishing.

The Rise of AI in STM Publishing

AI has introduced numerous benefits to STM publishing. Machine learning algorithms can process extensive data to uncover trends, predict peer review outcomes, and even aid in writing and editing. Tools like Grammarly and Copyscape help improve manuscript quality by catching grammatical errors and checking for duplicated text. AI-driven recommendation systems suggest relevant articles, which can streamline literature reviews for researchers.

Despite these advancements, the human touch remains indispensable. While AI can manage data and provide recommendations, it lacks the nuanced understanding and contextual insight that human experts offer. For STM publications, where accuracy, originality, and relevance are essential, integrating AI with human oversight is crucial.

Navigating the Key Trends in STM Publishing

This year’s STM publishing trends emphasize the integration of human expertise with AI capabilities. Here are the central themes shaping this year’s narrative:

- Collaboration Between Humans and Machines

Generative AI presents unique opportunities and challenges for STM publishing. One of the key concerns is whether AI could compromise the scholarly record and disseminate false information. Conversely, there is potential for AI to support the delivery of reliable research. As we look towards 2028, it is essential to explore how humans and machines will collaborate to curate and maintain the scholarly record.

To address these concerns, a careful approach is needed to harness AI’s potential while preserving ethical standards. AI can enhance efficiency in reviewing and editing processes, but human oversight is essential to ensure accuracy and integrity. Human experts must continuously evaluate and refine AI systems to mitigate risks and uphold scholarly standards.

- Ensuring Credibility and Trustworthiness

As AI and human interactions increasingly influence the management of the scholarly record, determining the credibility of sources becomes vital. The traditional peer review process, while foundational, can be enhanced with automated checks to improve efficiency and accuracy. This year’s trends highlight the need to maintain trust and integrity in scientific communication through innovative workflows.

Automated systems can help detect inconsistencies and potential errors in manuscripts, but they must be complemented by human judgment to ensure the reliability of the information. The challenge is to navigate the complexities of trust and credibility in an AI-influenced landscape. Maintaining the integrity of scientific communication requires a balance between automated processes and critical human evaluation.

- Maintaining Cohesion Amidst Challenges

Malicious actors, such as paper mills, present a significant threat by exploiting technology to spread misinformation and disinformation. Additionally, geopolitical tensions can lead to fragmented research developments, impacting the global scientific community. In this challenging environment, the key question is whether trust, stability, and cohesion can be sustained.

Fostering trust and stability is crucial to preserving the integrity and collaborative spirit of the research community. AI tools can help identify fraudulent activities and ensure adherence to publication standards, but human expertise is vital in maintaining cohesion and addressing broader geopolitical and ethical challenges.

Embracing a Hybrid Approach to Publishing

The integration of AI in STM publishing offers numerous advantages, but it must be complemented by human judgment to ensure a robust and ethical publishing process. Here are some areas where a hybrid approach of AI and human intelligence can make a significant impact:

- Enhancing Peer Review

AI can facilitate the peer review process by matching manuscripts with suitable reviewers based on their expertise and previous work. However, the review itself must be conducted by human experts who can provide in-depth analysis and feedback. Combining AI-driven reviewer selection with human evaluation ensures a thorough review process that upholds academic standards and improves the quality of published research.

- Refining Editing Processes

AI-powered editing tools can identify grammatical errors, suggest improvements, and streamline formatting tasks. Nevertheless, human editors must oversee these suggestions to ensure they align with the manuscript’s intended tone and style. This hybrid approach allows for a more refined editing process that maintains the author’s voice while enhancing readability and clarity.

- Supporting Ethical Oversight

AI systems can assist in monitoring compliance with ethical guidelines and detecting potential conflicts of interest. However, human reviewers play a critical role in evaluating the ethical implications of research and ensuring adherence to ethical standards. A hybrid approach ensures that ethical considerations are thoroughly addressed, preserving the credibility and integrity of scientific communication.
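To make the reviewer-matching idea under "Enhancing Peer Review" concrete, here is a minimal sketch in Python. It assumes each reviewer is described by a short free-text expertise profile and ranks reviewers by cosine similarity between word counts in the manuscript abstract and in each profile. The function names, reviewer names, and sample texts are all hypothetical, and a production system would likely use richer signals (publication history, conflict-of-interest checks, embeddings). The output is only a shortlist for an editor to consider, not a decision.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two term-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_reviewers(abstract, reviewer_profiles, top_n=3):
    """Return up to top_n (name, score) pairs, best match first."""
    manuscript_vec = Counter(tokenize(abstract))
    scored = [
        (name, cosine_similarity(manuscript_vec, Counter(tokenize(profile))))
        for name, profile in reviewer_profiles.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_n]


# Hypothetical data, for illustration only.
reviewers = {
    "Reviewer A": "protein folding molecular dynamics simulation",
    "Reviewer B": "clinical trial oncology immunotherapy outcomes",
    "Reviewer C": "graph neural networks molecular property prediction",
}
abstract = "We report immunotherapy outcomes from a phase II oncology clinical trial."

# The shortlist is a suggestion for a human editor, who makes the final choice.
shortlist = rank_reviewers(abstract, reviewers, top_n=2)
```

The design keeps the automated step narrow on purpose: it proposes candidates, while the human editor still weighs suitability and conflicts of interest, and the human reviewer still carries out the review.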

Preserving the Human Touch in AI-Driven STM Publication: Navigating Potential Pitfalls

In the rapidly evolving world of scientific, technical, and medical (STM) publishing, artificial intelligence (AI) has become a transformative force, revolutionizing workflows and enhancing productivity. From manuscript submission to peer review and final publication, AI tools are reshaping every aspect of the publishing process. However, while AI offers numerous benefits, it is crucial to address the potential negative impacts that could undermine the quality and integrity of STM publications. This blog explores both the advantages and the potential pitfalls of AI in STM publishing and emphasizes the importance of preserving the human touch.

The Rise of AI in STM Publishing

AI has introduced many advantages to STM publishing. Machine learning algorithms can process extensive data to uncover trends, predict peer review outcomes, and assist in writing and editing. Tools like Grammarly and Copyscape enhance manuscript quality by detecting grammatical errors and ensuring originality. AI-driven recommendation systems streamline literature reviews by suggesting relevant articles.

However, despite these advancements, AI’s integration into STM publishing is not without risks. While AI can handle data processing and provide recommendations, it lacks the nuanced understanding and contextual insight that human experts offer. This imbalance raises concerns about accuracy, originality, and the potential for AI to adversely affect scholarly communication.

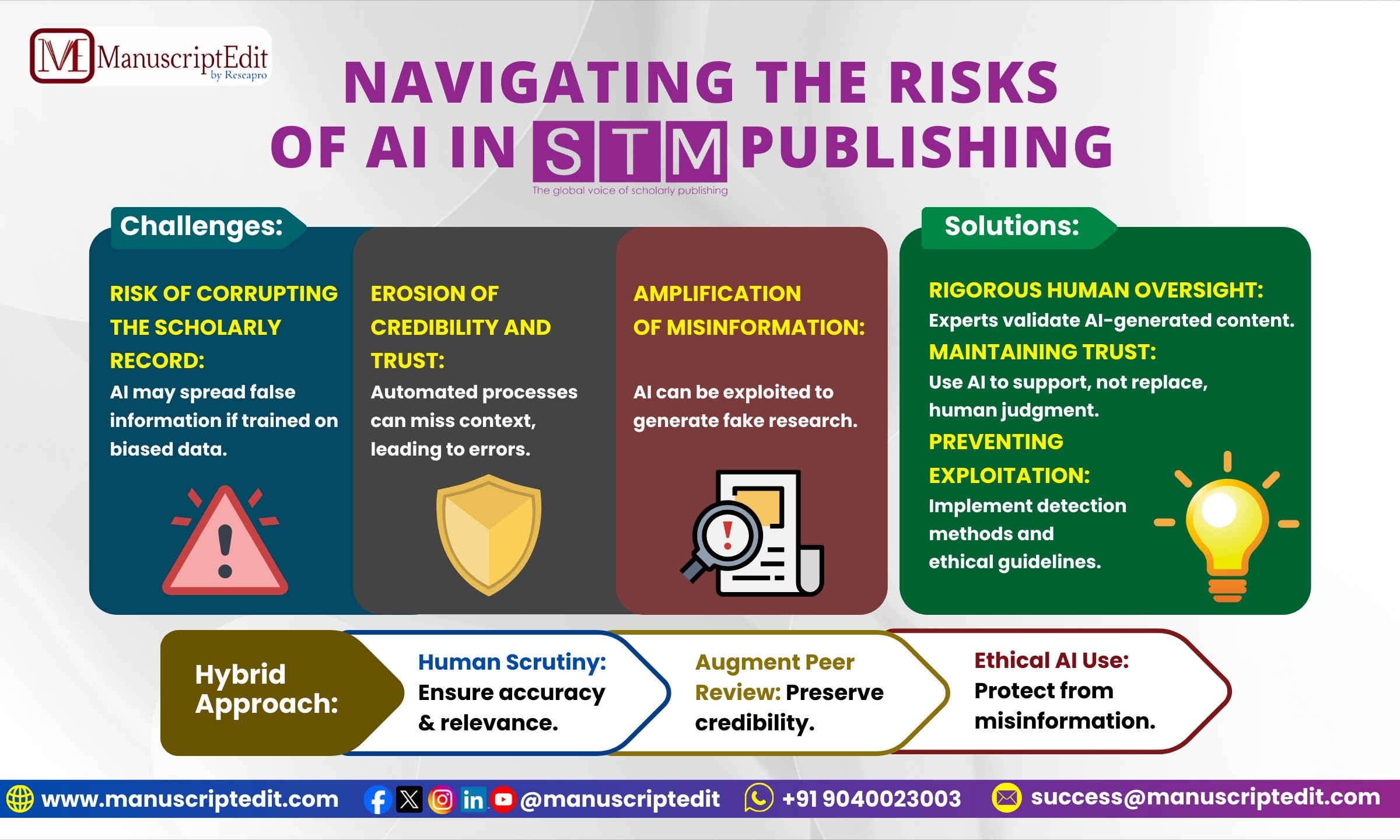

Potential Negative Impacts of AI in STM Publishing

- Risk of Corrupting the Scholarly Record

Generative AI has introduced significant opportunities, but it also poses risks to the scholarly record. One major concern is that AI could spread false information or inadvertently corrupt research findings. For example, if AI systems are trained on biased or incomplete datasets, they might propagate misinformation or produce flawed conclusions. This risk is particularly concerning as AI-generated content becomes more prevalent in scholarly publications. Ensuring that AI-enhanced processes do not compromise the integrity of research requires rigorous human oversight and validation.

- Erosion of Credibility and Trust

As AI and human interactions increasingly influence the management of the scholarly record, ensuring credibility and trustworthiness becomes critical. AI’s automated processes can sometimes overlook context and subtleties, leading to potential errors or misinterpretations in research. While AI tools can assist in detecting inconsistencies, they cannot replace the deep contextual understanding that human experts provide. The reliance on AI for these tasks risks undermining the traditional peer review process and eroding trust in scientific communication if not carefully managed.

- Amplification of Misinformation

Malicious actors, such as paper mills, can exploit AI technology to spread misinformation and disinformation. AI tools can be used to generate fake research or manipulate data, potentially misleading researchers and undermining the scientific community’s integrity. Additionally, geopolitical tensions and fragmented research developments can be exacerbated by AI’s ability to rapidly disseminate both accurate and inaccurate information. Addressing these challenges requires a balanced approach that combines AI capabilities with vigilant human oversight.

Addressing the Challenges: A Hybrid Approach

While AI presents potential risks, it is essential to balance its advantages with human expertise to mitigate these challenges. Here’s how a hybrid approach can address the negative impacts of AI:

- Ensuring Rigorous Human Oversight

To prevent the corruption of the scholarly record, AI systems must be complemented by rigorous human oversight. Experts need to scrutinize AI-generated content for accuracy and relevance, ensuring that it adheres to established scientific standards. This oversight is crucial in preventing the spread of misinformation and maintaining the integrity of research publications.

- Maintaining Trust and Integrity

To preserve credibility and trust in scientific communication, AI tools must be used to augment—not replace—human judgment. While AI can assist in detecting errors and inconsistencies, human reviewers must evaluate the context and implications of research findings. This approach ensures that automated processes do not undermine the traditional peer review system but rather enhance it.

- Preventing the Exploitation of AI

Combating the misuse of AI for generating fake research or manipulating data requires a multi-faceted approach. This includes developing advanced detection methods to identify fraudulent activities and implementing stringent ethical guidelines for AI use in research. Human experts must actively monitor and address potential abuses of AI technology to protect the scientific community from misinformation.
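As one illustration of what a simple detection method might look like, the sketch below flags pairs of submissions whose text overlaps heavily, a pattern sometimes associated with templated or recycled manuscripts. It compares sets of three-word shingles using Jaccard similarity and routes anything above a threshold to a human for review. The manuscript IDs, sample texts, and the 0.5 threshold are assumptions made for illustration. Real screening for paper-mill output relies on many more signals, and a high score is a prompt for human judgment, not proof of misconduct.

```python
import re
from itertools import combinations


def shingles(text, k=3):
    """Return the set of k-word shingles (overlapping word windows) in text."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b):
    """Jaccard similarity: size of the intersection over size of the union."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def flag_for_human_review(submissions, threshold=0.5):
    """Return (id_1, id_2, score) for every pair at or above the threshold."""
    sets = {sid: shingles(text) for sid, text in submissions.items()}
    flagged = []
    for left, right in combinations(sorted(sets), 2):
        score = jaccard(sets[left], sets[right])
        if score >= threshold:
            flagged.append((left, right, score))
    return flagged


# Hypothetical submissions, for illustration only.
submissions = {
    "MS-001": "The catalyst improved the reaction yield under mild conditions in all trials",
    "MS-002": "The catalyst improved the reaction yield under mild conditions in most trials",
    "MS-003": "Survey responses suggest that open peer review increases reviewer accountability",
}

# Flagged pairs go to a human expert; the code does not reject anything itself.
flagged = flag_for_human_review(submissions)
```

Here only the first two submissions are flagged, since they differ by a single word. What happens next (contacting authors, checking data, escalating) remains a human decision.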

Shaping the Future with Caution and Collaboration

As AI continues to influence STM publishing, understanding its potential pitfalls is crucial for maintaining the quality and integrity of scholarly communication. Here are some strategies for navigating these challenges:

- Enhancing AI Transparency

Transparency in AI systems is vital to ensure their ethical use in research. Researchers and publishers should be informed about how AI tools operate, including their limitations and potential biases. This transparency helps users make informed decisions and enhances the credibility of AI-assisted processes.

- Fostering Collaboration

Collaboration between AI developers, researchers, and publishers is essential for addressing the challenges posed by AI in STM publishing. By working together, stakeholders can develop effective strategies for integrating AI while safeguarding research integrity. This collaboration also helps identify and address potential risks before they become significant issues.

- Investing in Ethical AI Development

Investing in the ethical development of AI tools is crucial for minimizing their negative impacts. This includes creating algorithms that prioritize accuracy, transparency, and fairness. Ethical AI development helps ensure that technology serves to enhance—not undermine—the quality of scientific research.

Conclusion

AI-driven tools are transforming STM publishing by enhancing efficiency and streamlining various processes. However, it is crucial to address the potential negative impacts of AI, including risks to the scholarly record, erosion of credibility, and the amplification of misinformation. By integrating AI with rigorous human oversight, maintaining trust and integrity, and preventing the exploitation of technology, the publishing industry can navigate these challenges effectively.

The future of STM publishing requires a balanced approach that leverages the strengths of AI while preserving the essential human elements of scholarly communication. Through a collaborative and cautious approach, the research community can continue to advance knowledge while safeguarding the quality and integrity of scientific publications.